Great article discussing how the military is taking advantage of machine vision technologies. Dr. Rex Lee of Pyramid Imaging contributed to the content.

By Winn Hardin, Contributing Editor, AIA. Posted 07/17/2018

On land, at sea, and in the air, imaging systems for surveillance and situational awareness are helping military organizations improve their performance in tasks critical to command, control, communications, computers, intelligence, surveillance, and reconnaissance (C4ISR).

Standards such as GigE Vision, GenICam, and CoaXPress (CXP) bring unique benefits in image data delivery and compatibility, helping to keep warriors safe while reducing the cost of upgrading militaries to the newest technologies.

“In the past, most applications were point to point,” says Harry Page, President of Pleora Technologies Inc. “There is a wide range of predominantly proprietary approaches to support point-to-point applications, but increasingly the demand from end users is for a standards-based networked approach to multicast video and data from multiple endpoints to processing, recording, and display units. This is resulting in a split in the military market.

“On one side, incumbent suppliers want to continue to introduce new, often still proprietary, point-to-point solutions that they completely control. It’s basically a land grab for these manufacturers, because once they sell a system, they get to support that system for many years. This approach is increasingly at odds with users, who want open architectures, commercial technologies, and networked solutions that meet real-time latency demands while delivering and multicasting sensor data across multiple systems.”

Polaris’ new MRZR-X illustrates this point. The MRZR-X is a finalist in the U.S. Army’s unmanned equipment transport vehicle program. The vehicle can transport or support a squad while driving autonomously. According to Patrick Weldon, Director of Advanced Technology at Polaris, his company is adopting an open-architecture model to speed the introduction of technology to the troops.

“The squad is now the best source of information in combat,” Weldon said, adding that an open-architecture approach will help get that technology and information to the squad.

Vision Standards for Mobile Military Platforms

According to Pleora’s recent white paper, Local Situational Awareness Design and Military and Machine Vision Standards, the features and capabilities of GigE Vision and GenICam align well with the requirements of emerging military vehicle data standards, such as those from the British Ministry of Defence (MoD) Vetronics Infrastructure for Video over Ethernet (VIVOE) Defence Standard (Def Stan 00-82) and the U.S. Department of Defense Vehicular Integration for C4ISR/EW Interoperability (VICTORY) initiative.

Pleora’s Page acknowledges that open, standards-based network architectures can pose greater cybersecurity concerns than single-point solutions. However, he says “…instead of critical subsystems with a single point of failure, what we suggest is that every camera should be accessible through a service-oriented architecture. Through software, you can then define the video distribution services both inside and outside the vehicle.”

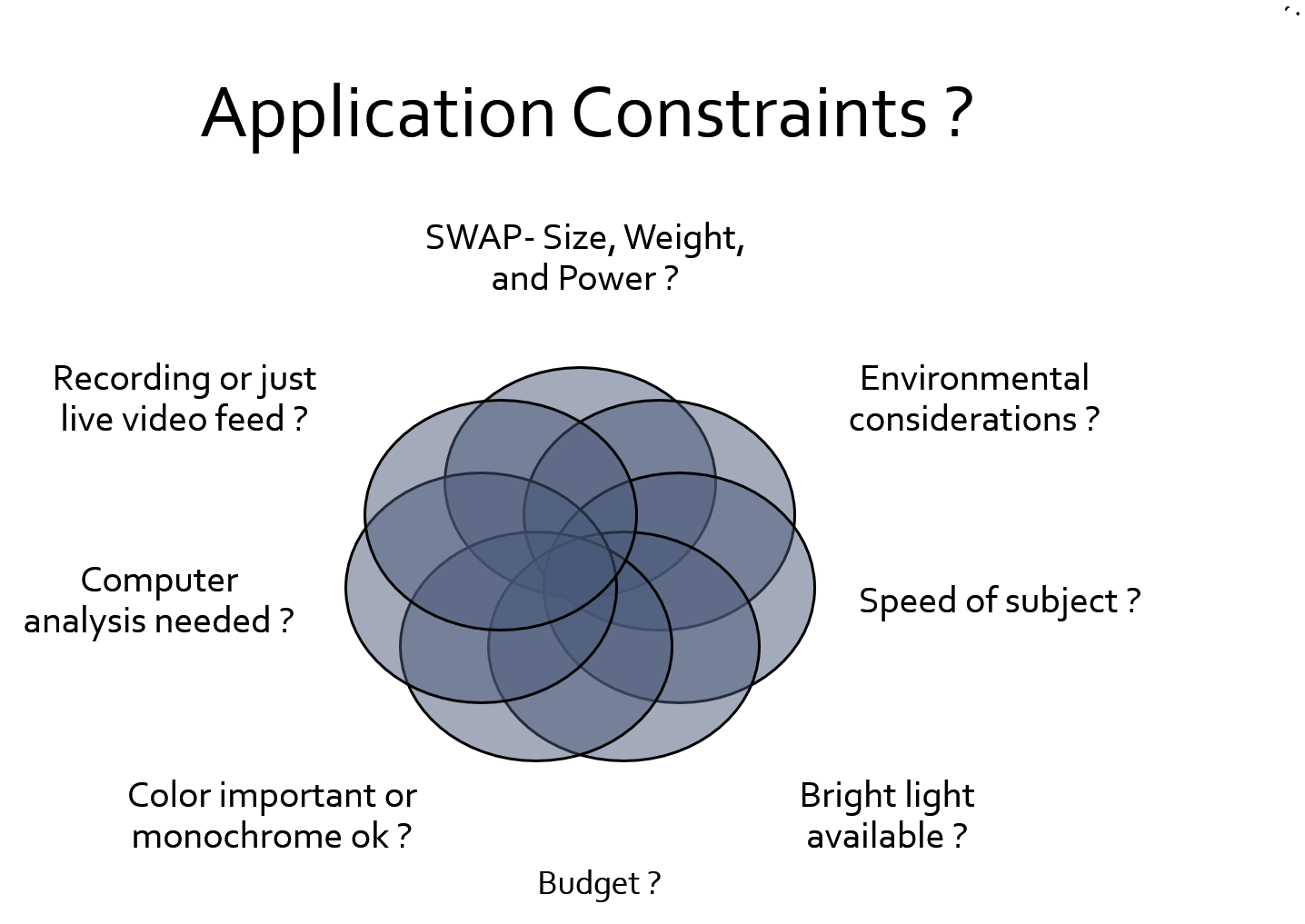

Moving from single-point links to an imaging network will help military vehicles address size, weight, and power (SWaP) challenges, even on the largest battle tanks, whose electronic systems carry heavy power requirements. Add the redundancy of a network architecture, along with expandability, scalability, upgradeability, and the ability to integrate with other networked C4ISR subsystems, and Pleora’s Page asserts that the resulting efficiencies and gains in operational effectiveness offset the security concerns while freeing up resources to improve cybersecurity.

CXP and Surveillance

Although coaxial cable is found on mobile platforms as well as fixed, land-based installations, the requirements for CXP “on the move” are different.

“A number of our frame grabbers have been used in drones,” says Keith Russell, President of Euresys, Inc., a manufacturer and supplier of image acquisition and processing components for machine vision and video surveillance applications. “[The military] chooses CXP because of its low latency, which an autonomous drone needs, and because of our support for ARM architectures, which allows the visible and thermal imagery to be processed on low-power ARM processors.…

“[But] one of the drivers behind CXP is the large analog infrastructure that uses coaxial cable in military installations. In many cases, data from multiple sensors will travel along that cable, and CXP uses multiplexing to manage multiple data streams over a single channel.”

Bandwidth is another area where CXP can trump GigE Vision networks, according to Dr. Rex Lee of Pyramid Imaging Inc. “The government still wants to use commercial off-the-shelf [COTS] components wherever it can,” says Dr. Lee. “When we look at the components we sell into military applications, the mix between GigE, CXP, Camera Link, etc., is about the same as on the industrial side of our business.

“Basically, military customers only want to pay for what they need. So, if they need more bandwidth, CXP, which requires a frame grabber, can be a better solution than GigE Vision, which doesn’t require a frame grabber. Or, in the case of 360-degree surveillance systems with a slip ring and power supply, CXP-to-camera direct connections can have a lot of benefits. A single conductor through a slip ring allows cost-effective 360-degree pan-tilt-zoom surveillance, and it only needs one cable.

“For applications that require 360-degree continuous surveillance, such as situational awareness applications for forward operating bases that identify nearby enemies by muzzle flares, high-resolution, high-speed cameras are a necessity. Pyramid Imaging’s embedded processing capabilities within smart cameras allow dramatic bandwidth reduction. As a result, GigE Vision or single-coax CXP can be used, offering much lower system costs,” concludes Dr. Lee.
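The bandwidth trade-off Dr. Lee describes can be checked with simple arithmetic: a camera's uncompressed data rate is width × height × bits per pixel × frames per second. The sketch below compares that rate with nominal link rates of 1 Gb/s for GigE Vision over Gigabit Ethernet, 6.25 Gb/s for a single CXP-6 link, and 12.5 Gb/s for a single CXP-12 link. These are line rates that ignore encoding and protocol overhead, so real headroom is smaller. The camera parameters and the 5% reduction factor that stands in for on-camera processing are hypothetical, chosen only to illustrate the calculation.

```python
# Nominal line rates in Gb/s. These ignore encoding and protocol overhead,
# so usable throughput on a real link is lower (an assumption of this sketch).
LINK_RATES_GBPS = {
    "GigE Vision (1 GbE)": 1.0,
    "CXP-6 (single coax)": 6.25,
    "CXP-12 (single coax)": 12.5,
}


def raw_rate_gbps(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed sensor output in Gb/s."""
    return width * height * bits_per_pixel * fps / 1e9


def links_that_fit(rate_gbps: float) -> list[str]:
    """Names of links whose nominal rate can carry a stream of rate_gbps."""
    return [name for name, cap in LINK_RATES_GBPS.items() if rate_gbps <= cap]


if __name__ == "__main__":
    # Hypothetical camera: 2048 x 2048 pixels, 8-bit mono, 60 frames/s.
    raw = raw_rate_gbps(2048, 2048, 8, 60)
    print(f"raw stream: {raw:.2f} Gb/s fits {links_that_fit(raw)}")

    # Hypothetical on-camera processing that forwards only 5% of the pixel
    # data (e.g. regions around detected events) instead of full frames.
    reduced = raw * 0.05
    print(f"reduced stream: {reduced:.2f} Gb/s fits {links_that_fit(reduced)}")
```

With these numbers the raw stream is about 2.01 Gb/s, which exceeds a single GigE link but fits within one CXP-6 coax. After the hypothetical reduction it drops to about 0.10 Gb/s, which GigE Vision can carry. That is the kind of shift toward lower-cost links the quote describes.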

As machine vision enables more machines to see and respond to their surroundings, managing imaging network data along with size, weight, and power becomes more important on advanced mobile platforms. And it’s not just the military. The same growth in imaging, and the same need for network and power management, are also drawing the attention of the consumer automotive market.

“The technical challenges of real-time video and data sharing for autonomous cars and military vehicles are very similar, but the markets are moving at a different pace,” says Ed Goffin, Marketing Manager at Pleora. “The military market made a concerted effort to standardize image networking for vehicles, and as a result there are now systems in the final stages of testing or early deployment. Some of the ‘lessons learned’ from the military market around standards, networking, processing, and, maybe most important, human usability should play a key role in the ongoing evolution of autonomous car technologies.”

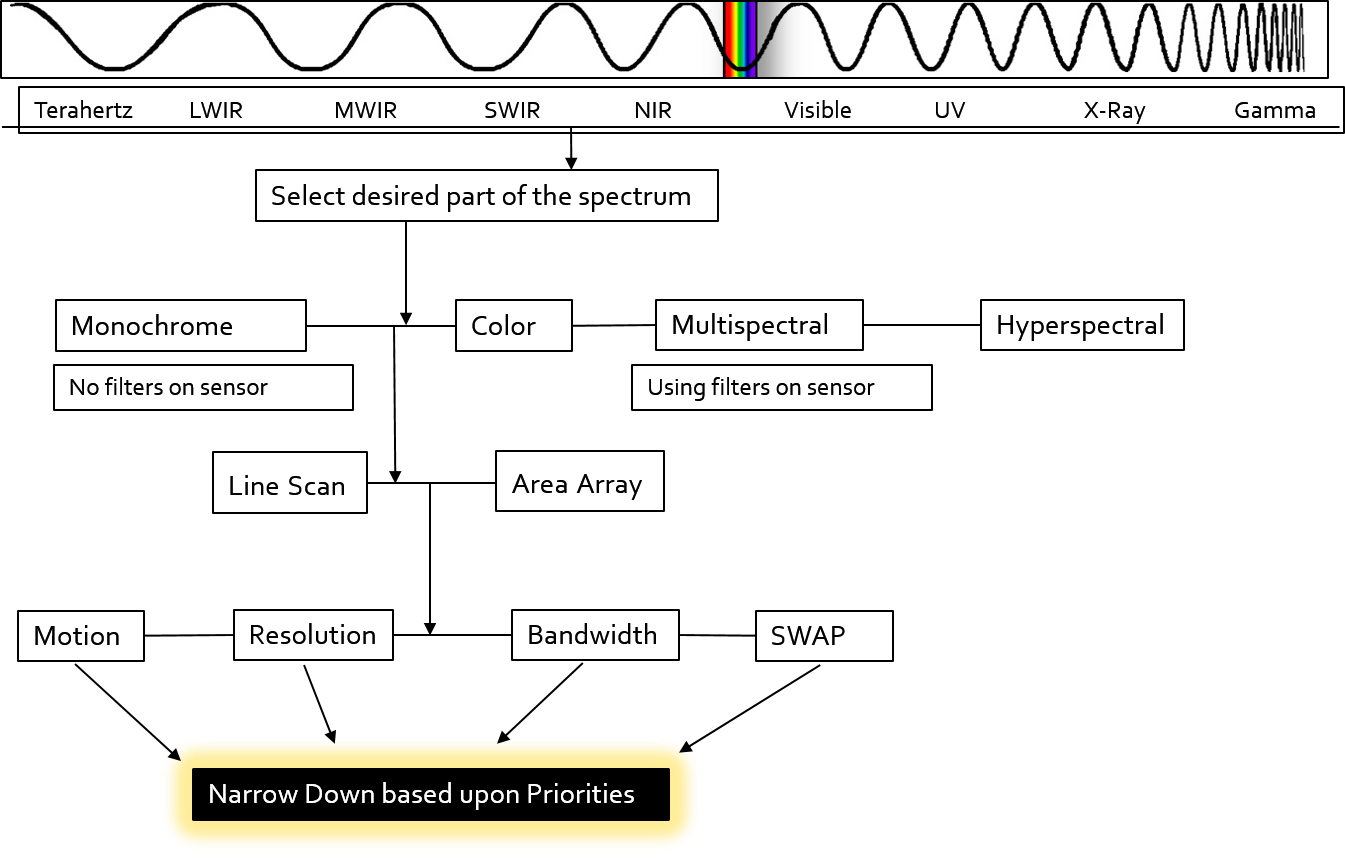

Imaging problem? A bandpass filter might be your answer. To find the right filter for the job, use a broad-spectrum white light and a bandpass filter kit to highlight various wavelengths. During testing, each bandpass filter produces results similar to those of an LED at the matching wavelength, so this process helps you determine the appropriate LED wavelength for your vision application. By viewing the image each bandpass filter produces, you can determine the best color for maximizing contrast and reducing interfering light. Your vision system may also benefit from other filters that reduce glare, remove saturation, or balance color.
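Comparing the filter images by eye works, but the same comparison can be scored numerically. The sketch below assumes you have a grayscale image captured through each bandpass filter and a mask marking which pixels belong to the feature of interest. It rates each filter by Michelson contrast between the mean feature and mean background intensity, then picks the highest score. The metric, the filter names, and the tiny 2 × 2 "images" are illustrative assumptions, not part of any filter kit's documented procedure.

```python
from statistics import mean


def michelson_contrast(image, feature_mask):
    """Contrast between mean feature and mean background intensity, in [0, 1].

    image and feature_mask are equally sized 2D lists; the mask must contain
    both True (feature) and False (background) pixels, or mean() will raise.
    """
    pairs = [(p, m) for row, mrow in zip(image, feature_mask) for p, m in zip(row, mrow)]
    feature = mean(p for p, m in pairs if m)
    background = mean(p for p, m in pairs if not m)
    if feature + background == 0:
        return 0.0
    return abs(feature - background) / (feature + background)


def best_filter(images_by_filter, feature_mask):
    """Return the filter whose image gives the highest contrast, plus all scores."""
    scores = {name: michelson_contrast(img, feature_mask)
              for name, img in images_by_filter.items()}
    return max(scores, key=scores.get), scores


if __name__ == "__main__":
    # Hypothetical captures of the same scene through three bandpass filters.
    mask = [[True, False], [False, False]]
    images = {
        "470 nm": [[200, 100], [100, 100]],
        "625 nm": [[220, 40], [40, 40]],
        "850 nm": [[120, 110], [110, 110]],
    }
    winner, scores = best_filter(images, mask)
    print(winner, {k: round(v, 3) for k, v in scores.items()})
```

In this made-up example the 625 nm filter separates the feature from the background best, which would point toward an LED of that wavelength. A real test would use full-resolution captures and a mask drawn around the actual feature.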
